Abstract

Intelligent process control and automation systems require authentication through digital or handwritten signatures. Digital copies of handwritten signatures exhibit different pixel intensities and spatial variations due to factors such as the writing surface and the writing instrument. To address this fluctuation, which is a drawback for control systems, this manuscript introduces a Spatial Variation-dependent Verification (SVV) scheme using textural features (TF). The handwritten and digital signatures are first examined for their pixel intensities to detect identification points. These identification points vary with the signature's pattern, region, and texture. Each identified point is spatially mapped onto the digital signature to verify textural feature matching. The textural features are extracted between two successive identification points to prevent cumulative false positives. A convolutional neural network (CNN) supports this process through layered analysis: the first layer generates new identification points, and the second layer selects the maximum matching feature for varying intensity. The process is non-recurrent across different textures because the false factor cuts down the iterated verification. Therefore, the maximum matching features are used to verify the signatures without a high false-positive rate. The proposed scheme's performance is evaluated using accuracy, precision, texture detection, false positives, and verification time.


Introduction

Handwriting verification and recognition are used in many fields. Handwriting verification protects users' security and organizations' safety from unknown attackers1. Handwriting verification is complicated in applications that provide information for further security policies2. Various handwriting verification methods used in control systems first capture the core or sequence of the content3. The multi-semantic fusion method is widely used in handwriting verification and prediction. The semantic method detects spatial and temporal features of handwriting that produce optimal data for the verification process4,5.

Handwriting identification is the process of determining the identity of the author of a handwritten document. Because handwriting features play a major role in writer classification, classifier accuracy is very sensitive to the weight given to each writer's score in the feature set. This communication aims to better understand how feature selection and weighting affect the handwriting recognition challenge. The current problems have three main causes: (i) handwriting recognition relies mostly on subjective experience, with little help from objective statistical methods; (ii) there are no unified, comprehensive evaluation standards; and (iii) experts' proficiency at these tasks needs to improve.

Texture feature-based handwritten signature verification methods are commonly used in various systems6. Texture features enhance the accuracy of signature verification and improve system performance and effectiveness7. Feature extraction techniques identify the texture features of the signature and handwriting8. The support vector machine (SVM) model is used to verify handwritten signatures stored in a database and to detect the important texture features9. Local binary patterns (LBP) and the greyscale levels of signatures are verified based on certain functions10.
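Local binary patterns are a standard texture descriptor. As an illustration only (the LBP variants and verification functions used in the cited works are not specified here), the sketch below computes the basic 8-neighbour LBP code for every interior pixel of a greyscale signature image and summarises the codes as a normalised 256-bin histogram. The function names `lbp_image` and `lbp_histogram` are hypothetical.

```python
import numpy as np

def lbp_image(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour LBP code for every interior pixel of a 2-D greyscale image."""
    g = gray.astype(np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from the top-left pixel; bit k <- offset k.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        # Shifted view of the image aligned with the interior (centre) pixels.
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit when the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes

def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Normalised 256-bin histogram of LBP codes, usable as a texture descriptor."""
    codes = lbp_image(gray)
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()
```

Two signatures could then be compared with any histogram distance; which distance to use is an open design choice, not something the cited methods prescribe.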

Artificial intelligence (AI) algorithms are used in handwritten signature verification to improve accuracy and reduce the verification workload11. The artificial neural network (ANN) approach is the most common in signature verification systems12. Slopes and patterns provide information necessary for verification, which reduces latency in both classification and identification13. Deep CNNs are used for handwritten signature verification to improve system performance and efficiency14,15.

The main objectives of the paper are:

- To design the Spatial Variation-dependent Verification (SVV) scheme using textural features (TF) for handwriting identification and verification in intelligent process control and automation systems.
- To extract textural features and identification points using a convolutional neural network, preventing cumulative false positives.
- To show experimentally that the proposed scheme attains higher accuracy, precision, and texture detection, with fewer false positives and shorter verification time, than existing methods.

Related works

Li et al.16 designed an adversarial variation network (AVN) model for handwritten signature verification. AVN detects the effective features present in the given data. The AVN model achieves a verification accuracy of 94%, enhancing system performance and feasibility. However, the performance of the technique degrades much more slowly than that of the baseline technique, particularly when the variation is small.

Ma et al.17 introduced a transformer-based deep learning model for sequence learning, a quantitative approach for analyzing the tremor symptoms reflected in signatures. The model achieves an efficiency of 97.8% and an accuracy of 95.4% in validation and verification. However, the amount of handwriting data gathered from ET patients is limited.

Zhao et al.18 developed a deep CNN-based framework to identify calligraphy imitation (CI). The CNN detects the CI features and patterns present in handwritten signatures. Results show that the framework achieves a verification accuracy of 96.8% and improves system performance. However, CNN-based methods need many handwriting samples from each writer.

Yang et al.19 proposed an unsupervised model-based handwriting posture prediction method. The method achieves an accuracy of 93.3% (with 76.67% reported for posture prediction), improving the system's significance and effectiveness. However, the dimensionality-reduced data obtained by running the t-SNE method with the same parameters are distributed differently across runs, so further iterations are needed to choose the distribution map used as the experimental data.

Al-Haija et al.20 designed a handwritten character recognition method based on the ShuffleNet CNN, which analyzes the datasets stored in the database. Compared with other methods, the ShuffleNet CNN-based method achieves an accuracy of 99.50% in character recognition. However, the sample size used in this study is small.

Ruiz et al.21 developed an offline handwritten signature verification method using a Siamese neural network (SNN). The method achieves an accuracy of 99.06% in signature verification, improving system efficiency. A limitation of SNN models is that they require large amounts of labeled data for training, whereas available signature databases are usually small (in terms of the number of genuine signatures per writer).

Xue et al.22 introduced an attention-based two-pathway densely connected convolutional network (ATP-DenseNet) for gender identification from handwriting. A convolutional block attention module (CBAM) extracts the word features from signatures. Results show that ATP-DenseNet achieves an accuracy of 66.3% in gender identification. However, cropping word images for ATP-DenseNet is difficult for documents with a lot of cursive writing, and the results depend on the cropping strategy used for the word images.

Hamdi et al.23 proposed a new data augmentation method for multilingual online handwriting recognition (OHR) systems. The augmentation relies mainly on a geometric method that predicts the online handwriting trajectory (OHT). The method improves system effectiveness, achieving an accuracy of 97.2% in recognition. However, some labels in the database contain only a limited number of samples.

Bouibed et al.24 developed an SVM-based writer retrieval system for handwritten document images. The SVM classifier classifies the documents based on certain conditions and patterns. Results show that the system improves performance and feasibility, reaching 96.76%. However, documents must be sorted from most to least similar to accomplish writer retrieval.

Alpar et al.25 introduced an online signature verification framework using signature barcodes. The framework achieves a verification accuracy of 97.9%, improving the efficiency and reliability of online systems. However, this method requires selecting an optimal wavelet among many alternatives.

Maruyama et al.26 designed an intrapersonal parameter optimization method for offline handwritten signature augmentation and used it in an automatic signature verification system (ASVS). The goal is to increase the accuracy of signature samples and to model the writer's variability and features. The method reaches a performance of 95.6% and improves the effectiveness of the ASVS. However, optimizing the parameters may not produce more compact clusters in the feature space for datasets such as the Center of Excellence for Document Analysis and Recognition (CEDAR) dataset.

Zenati et al.27 presented a signature steganography document image system using beta-elliptic modeling (SSDIS-BEM). A binary robust invariant scalable keypoints (BRISK) detector locates the exact positions and identities of signatures. Compared with other methods, SSDIS-BEM achieves an accuracy of 84.38% in signature verification. However, the system is less stable under lossy compression, because discrete cosine transform (DCT) compression significantly alters the pixel intensities of the embedded documents.

Wei et al.28 introduced an online handwritten signature verification method based on sound and vibration (SVSV). SVSV achieves a signature verification accuracy of 98.4%, improving the performance and efficiency of online systems. However, its robustness is considerably lower when signatures are written at different positions.

Cadola et al.29 proposed a collaborative learning-based teaching approach that gives forensic students effective strategies for verifying signatures. The approach achieves an overall efficiency of 93.4% in the students' learning process. However, some characteristics remained problematic to forge (e.g., loops or angles, and commencements or terminations).

Houtinezhad et al.30 developed a writer-independent signature verification method based on feature extraction fusion (FEF). Canonical correlation analysis (CCA) is used to analyze the discriminative features of a signature. The method achieves an accuracy of 86% in verification, improving system performance and reliability. Limitations include reliance on a single reported visual representation and a lack of additional data on genuine and forged signatures.

Zhou et al.31 proposed the dual-fuzzy convolutional neural network (DF-CNN) for handwritten image recognition. The study details the calculation process, including forward propagation, backward propagation, and parameter updates, and gives an optimization method for finding the best DF-CNN results. The DF-CNN and its optimization method are applied to a real problem: recognizing handwritten numbers. The calculations and comparisons show that the new model and method are both feasible and beneficial. However, the number of sample images used in this study is small.

Ponce-Hernandez et al.32 proposed a fuzzy vault scheme based on fixed-length templates for dynamic signature verification. The templates are built from fifteen global features of the signature. The system is evaluated on three databases: a private signature collection and the public MCYT and BioSecure databases. The test results show higher performance than existing models. However, the technique is time-inefficient because it must evaluate several candidate polynomials for each authentication attempt.

Abdul-Haleem33 created an offline signature verification system that combines local ridge characteristics with additional features derived from a two-level Haar wavelet transform. Each wavelet sub-band image is divided into overlapping blocks, and local characteristics and wavelet energies are extracted from each block. For verification, the system's FRR was 0.025% and its FAR was 0.03%. However, the choice of block length and overlap ratio significantly affects the recognition rate.

According to these researchers, handwriting can be recognized with various neural network and machine learning techniques. However, these techniques consume considerable computation time and have difficulty identifying different writing styles. Some methods require substantial data, face challenges with dimensionality reduction, or need an optimal wavelet selection, as listed in Table 1. Among the works reviewed above, three existing methods, SV-SNN21, AVN16, and SVSV28, are selected for comparison. The spatial variation-dependent verification scheme is proposed to overcome these research difficulties.

Proposed spatial variation-dependent verification (SVV) scheme

Problem statement

A person's handwriting evolves and changes over time, making it a behavioral biometric. Writing requires coordination between the brain's motor effectors (the hands) and the body's sensory organs (the eyes), which enables humans to create intricate ink patterns and sequences. To build reliable writer identification systems, scientists have studied the behavioral side of writing styles, or "handwriting biometrics". For decades, handwriting has also been studied as a proxy for personality, and it interests multiple disciplines, including forensic science, psychology, and palaeography. Both character style and literary style vary considerably between writers. Handwriting identification is the procedure that determines the authorship of a handwritten document. Establishing authorship from handwritten text involves three stages: data collection and preprocessing, feature extraction, and classification. The primary challenge in handwriting recognition is obtaining features that accurately represent the many types of handwriting. Although several feature extraction methods have been proposed and applied to handwriting recognition, the literature does not provide enough information to fully analyze the significance of each feature.

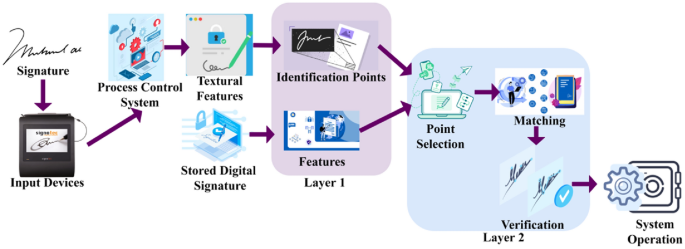

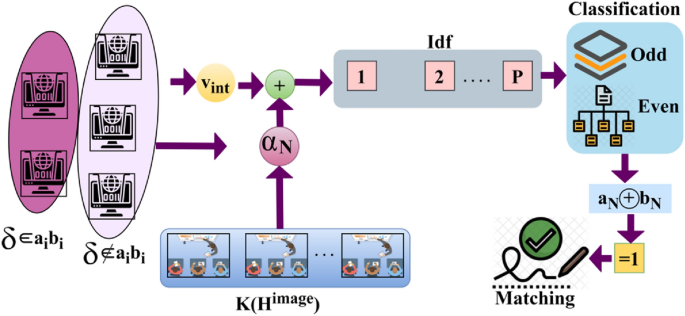

This study proposes an SVV scheme using TF. First, the pixel intensities of the handwritten and digital signatures are checked to detect identification points. These distinguishing points vary with the signature's design, location, and texture. To confirm textural feature matching, each selected point is spatially mapped onto the digital signature. The textural characteristics are extracted between two consecutive identification points to avoid accumulating false positives. A CNN supports this layered analysis: the first layer produces new identification points, and the second layer chooses the best-matching feature for intensity changes. The proposed SVV scheme defines the identification and verification of handwritten signatures to ensure better textural feature extraction in centralized intelligent process control systems. Handwritten text generally carries a writing style unique to each individual, and the distribution of the handwritten signatures is used to define that style. Influential textural features, such as pixel intensities and spatial variations, are detected during handwriting verification and used to identify the writer's sex and writing style. The handwritten and digital signatures are thus used for identification point detection. In a heterogeneous environment, the handwritten image \({H}^{image}\) serves as the input to the device for recognition and verification of the writer identity \(Wr\). Figure 1 presents the proposed scheme's process.
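Extracting textural features between two consecutive identification points can be illustrated with a small sketch. It assumes, hypothetically, that identification points are column positions along the signature and that three simple statistics (mean intensity, intensity standard deviation, and ink density) stand in for the scheme's textural features; the actual points and features in the SVV scheme come from the CNN and are not reproduced here. The name `segment_features` and the `ink_threshold` default are illustrative.

```python
import numpy as np

def segment_features(gray: np.ndarray, id_points: list[int], ink_threshold: int = 128) -> np.ndarray:
    """Texture statistics for each segment between consecutive identification points.

    id_points: hypothetical column indices; each pair (p_k, p_k+1) bounds one segment.
    Returns an array of shape (len(id_points) - 1, 3): mean, std, ink density.
    """
    pts = sorted(id_points)
    rows = []
    for left, right in zip(pts[:-1], pts[1:]):
        seg = gray[:, left:right].astype(np.float64)
        if seg.size == 0:
            rows.append([0.0, 0.0, 0.0])  # empty segment (duplicate points)
            continue
        ink = float(np.mean(seg < ink_threshold))  # fraction of dark (ink) pixels
        rows.append([float(seg.mean()), float(seg.std()), ink])
    # reshape keeps the (k, 3) shape even when there are fewer than two points
    return np.array(rows, dtype=np.float64).reshape(-1, 3)
```

Because each segment is summarised independently, an error in one segment does not propagate into its neighbours, which is the intuition behind confining feature extraction to pairs of successive points.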

The texture detection is modeled for the feature-matching process, and the handwritten signatures are verified for heterogeneous writers: the control system includes female \(F\), male \(M\), and other-gender \(O\) writers. The handwritten and digital signatures are verified using two successive identification points based on a CNN. Identification points are distinguishing characteristics of an individual's handwritten signature and are essential for validating authorship and distinguishing between authors. Detecting them accurately underpins signature verification accuracy and precision, and matching identification points reduces false positives (\(FP\)), which makes the findings more reliable.

Let \(P\) represent the process control system containing \(K\left({H}^{image}\right)\) handwritten signatures available for handwriting verification. The handwritten signature generated by the input device \({in}^{d}\) is expressed as:

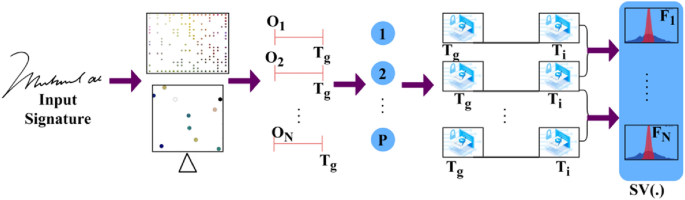

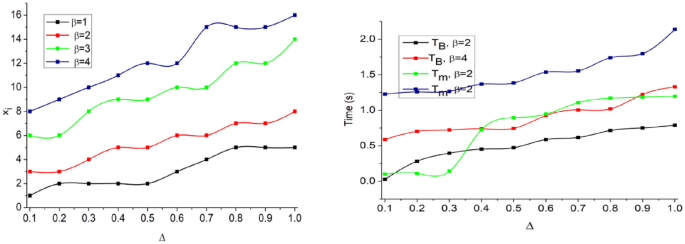

In Eq. (1), \(SV(.)\) denotes the spatial variations and \(\Delta \) the varying pixel intensities; \(VT\) is the time for verifying the handwritten signature with input devices, as per Eq. (2). The variable \({T}_{g}\) is the handwritten or digital signature generation time, \({T}_{i}\) is the overall time for identifying the handwriting, and \({T}_{m}\) is the identification point and feature matching time.

The condition \(N<{H}^{image}\) must hold for all digital and handwritten signatures from the input device verified at any time \(VT\). This authentication controls anonymous changes made by a hacker or any other person during a confidential process. Authentication is a security feature that ensures the reliability of the network and of the information being processed: it allows only authorised, legitimate changes while detecting and mitigating unauthorised or malicious ones. This is crucial in systems that use AI to maintain process correctness and reliability. The spatial variation estimation process is illustrated in Fig. 2.

The input is first segregated along \(x\) and \(y\) \(\forall \Delta \in {O}_{1}\) to \({O}_{N}\); this occurs only for \({T}_{g}\in P\), with \(\Delta \) classified as high or low depending on the observed \({T}_{g}\). \({F}_{1}\) to \({F}_{N}\) are validated to extract the variations, and spatial lookups match the input with the stored signatures. An approximate total of \(n=2500\) is considered for the special textural features of letters. For the digital copies of handwritten signatures, the identification points are obtained as in Eq. (3):

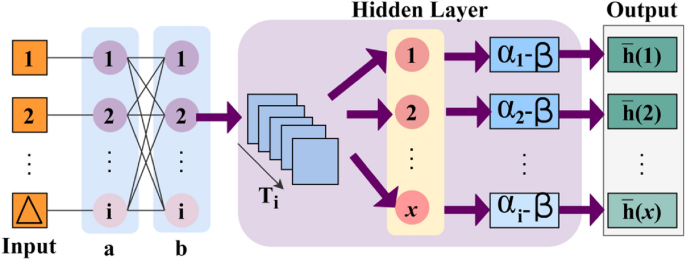
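One simple way to quantify the spatial variation along \(x\) and \(y\) described above is the mean absolute intensity difference between horizontally and vertically adjacent pixels, with the intensity range as a stand-in for \(\Delta\). This is purely an assumption for illustration, since the exact form of \(SV(.)\) is given only in the paper's equations. The hypothetical helper `variation_gap` then compares an input image with a stored one, loosely mirroring the spatial lookup step.

```python
import numpy as np

def spatial_variation(gray: np.ndarray) -> dict[str, float]:
    """Mean absolute intensity change along x and y, plus the intensity range.

    Assumes a 2-D image of at least 2 x 2 pixels.
    """
    g = gray.astype(np.float64)
    dx = np.abs(np.diff(g, axis=1))  # differences between horizontally adjacent pixels
    dy = np.abs(np.diff(g, axis=0))  # differences between vertically adjacent pixels
    return {
        "sv_x": float(dx.mean()),
        "sv_y": float(dy.mean()),
        "delta": float(g.max() - g.min()),  # hypothetical stand-in for Delta
    }

def variation_gap(query: np.ndarray, stored: np.ndarray) -> float:
    """Largest absolute difference between the two images' variation statistics."""
    q, s = spatial_variation(query), spatial_variation(stored)
    return max(abs(q[k] - s[k]) for k in q)
```

A small gap suggests the input and the stored signature share similar stroke texture. Any acceptance threshold on the gap would have to be tuned on data.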

In Eq. (4), \({idf}_{p}\left(x\right)\) denotes the detection of identification points in a CNN for generating new identification points:

In Eq. (5), \({\alpha }_{i}\) denotes the stored digital signature, \({x}_{i}\) the extracted features, and \(FP\) the false positives:

In Eq. (6), \({b}^{i}=a\left({x}_{i}=1\mid {Wr}_{1}\right)\) denotes the \(i\)th textural feature computation with the probability of a male writer, \({b}^{i}\notin \left\{0,1\right\}\); \({a}^{i}=b\left({x}_{i}=1\mid {Wr}_{2}\right)\) denotes the \(i\)th textural feature computation with the probability of a female writer, \({a}^{i}\notin \left\{0,1\right\}\); and \({a}^{i}{b}^{i}=ab\left({x}_{i}=1\mid {Wr}_{3}\right)\) denotes the \(i\)th textural feature computation with the probability of an other-gender writer, \({a}^{i}{b}^{i}\notin \left\{0,1\right\}\). Let \(\beta \) denote the identification of special characters, and let \(L\) be the number of features of the handwritten text that serve as inputs to the devices. The identification points in the CNN are then expressed as in Eq. (7):

In the first layer, the newly generated identification points and their varying features are analyzed by the CNN against the stored digital signature. The CNN process for identification points is presented in Fig. 3.
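The per-class probabilities of Eq. (6) resemble estimates of \(P(x_i = 1 \mid Wr_c)\) for a binary feature \(x_i\) and writer class \(c\). A minimal sketch, assuming binary feature vectors and class labels such as "M", "F", and "O", estimates them with add-one (Laplace) smoothing. The smoothing is an assumption of this sketch, not the paper's method; it keeps every estimate strictly between 0 and 1, consistent with the requirement that these probabilities lie outside \(\{0,1\}\). The name `class_feature_probs` is hypothetical.

```python
import numpy as np

def class_feature_probs(X: np.ndarray, labels: np.ndarray, alpha: float = 1.0) -> dict:
    """Estimate P(x_i = 1 | class) for every binary feature column of X.

    X: (n_samples, n_features) array of 0/1 values; labels: (n_samples,) class labels.
    alpha > 0 is the Laplace smoothing strength, so no estimate reaches exactly 0 or 1.
    """
    X = np.asarray(X, dtype=np.float64)
    labels = np.asarray(labels)
    probs = {}
    for c in np.unique(labels):
        Xc = X[labels == c]
        # (number of ones + alpha) / (number of samples + 2 * alpha) for a binary feature
        probs[c.item()] = (Xc.sum(axis=0) + alpha) / (Xc.shape[0] + 2 * alpha)
    return probs
```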

The input \(\forall \Delta \) is validated for \({a}_{i}{b}_{i}\) across \({T}_{i}\), concealing \({T}_{g}\) and \({T}_{m}\), such that \(SV\left(.\right)\) is detected. In the \(SV(.)\) detection process, \(x\) is the key factor for detecting identification points, which appear as \(\left({\alpha }_{i}+{x}_{i}\right)\) and can cause \(FP\). Therefore, \(\beta \) from different instances \(i\) is validated to prevent \(FP\), generating the output \(\overline{h }(x)\) with precise identification points (Fig. 3). The detected identification points are eligible for textural feature matching with \(K\left({{H}^{image}}^{P}\right)\), depending on the authentication using \(L\), as in Eq. (8):

The identification points are used to verify textural feature matching for the writers; if \(N<{H}^{image}\), then \({\left({H}^{image} \right)}^{N}\). The process differs for the \(N={H}^{image}\), \(N>{H}^{image}\), and \(N<{H}^{image}\) conditions. The \(N<{H}^{image}\) condition is modeled as a layered analysis for identifying handwriting, in which the verification time is similar for all writers irrespective of \(N\) and \(VT\). Case 1 and Case 2 categorize and address the operating circumstances of the proposed SVV-TF and define how the system manages identification points for proper verification. Case 1 describes the situation in which the number of generated identification points equals the number of handwritten signatures. Here each generated point can be paired with a specific signature, which yields a simple and effective verification. Case 2 covers situations in which the number of generated identification points is smaller than the number of handwritten signatures. This case is further subdivided according to whether the ratio of signatures to points, \({H}^{image}/N\), is even or odd. Specific procedures manage these cases, ensuring reliable verification even when the numbers of points and signatures differ.
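The case analysis can be summarised as a small dispatch function. This is a sketch under stated assumptions: `classify_case` is a hypothetical name, the parity of \({H}^{image}/N\) is taken on the integer quotient because the text does not say how a non-integer ratio is handled, and the \(N>{H}^{image}\) condition, which the text mentions but does not analyse as a separate case, is simply reported.

```python
def classify_case(num_points: int, num_images: int) -> str:
    """Map N (generated identification points) and H (handwritten images) to a case label."""
    if num_points <= 0 or num_images <= 0:
        raise ValueError("counts must be positive")
    if num_points == num_images:
        return "case1"  # N == H: one generated point per signature
    if num_points < num_images:
        # Assumption: parity of the integer quotient H // N decides the sub-case.
        quotient = num_images // num_points
        return "case2-even" if quotient % 2 == 0 else "case2-odd"
    return "n-exceeds-h"  # N > H: mentioned in the text but not analysed as a case
```

For example, 2 points and 6 images give a quotient of 3, so the odd branch of Case 2 applies.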

Case 1 The \({H}^{image}\) count equals the number of generated identification points (i.e., \(N={H}^{image}\)).

Analysis 1 This is the ideal case for all writers: if a generated identification point does not match \(K\left({{H}^{image}}^{P}\right)\), the iterated verification is cut down completely. Here, the first layer processes and generates new identification points based on \(N\) or \({H}^{image}\). \({F}_{F}\) denotes the false factor identified by the input device, which serves as the root of the second layer. The false factor refers to inaccuracies or erroneous information introduced during identification and verification, that is, situations in which the system wrongly recognizes an individual or fails to identify their identification points. Reducing \({F}_{F}\) is critical for increasing the accuracy and dependability of handwriting recognition systems.

The output of \({F}_{F}\), \(\left\{K\left({{H}^{image}}^{1}\right), K\left({{H}^{image}}^{2}\right),\dots K\left({{H}^{image}}^{P}\right)\right\}\), is assigned to the individual writers. In textural feature extraction, \(\forall N={H}^{image}\), the incoming writer's handwriting is matched with the stored signatures as shown in Eq. (9):

where \(\delta \) is the generated point selection based on the writer's writing style; it is identified without the false factor, and its spatial variations and pixel intensities \({a}_{i}^{{F}_{F}}\) and \({b}_{i}^{{F}_{F}}\) are matched with the stored signatures.

Case 2 The number of generated identification points is less than the \({H}^{image}\) count (i.e., \(N<{H}^{image}\)).

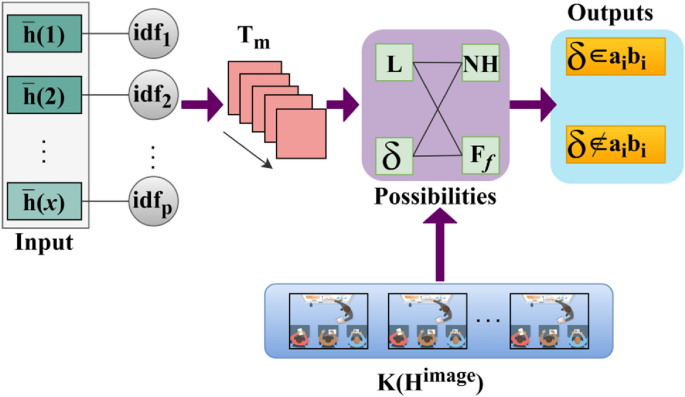

Analysis 2 Digital or handwritten signatures and writer identification make the system reliable, reducing the chance of a security vulnerability without increasing computational complexity. In this case, \(N<{H}^{image}\) such that \(\frac{{H}^{image}}{N}\) is even or odd, and the selection point must satisfy maximum matching. Point selection through the CNN for the above cases is presented in Fig. 4.

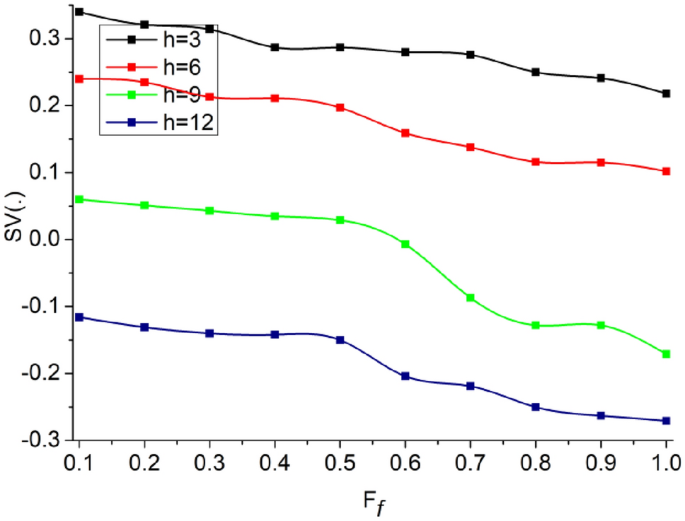

The outputs of the first layer, \(\overline{h }(1)\) to \(\overline{h }(x)\), are fed as input to \(id{f}_{p}\), provided the analysis is performed within \({T}_{m}\) alone. This reduces the actual time required to prevent \((N-H)\) occurrences \(\forall {F}_{f}\). With the available \(\Delta \) and \(K\left({H}^{image}\right)\), the possibilities of \(L\) and \(\delta \) are validated for \(\left(N-H\right)\) and \({F}_{f}\), and the output is classified as \(\delta \in {a}_{i}{b}_{i}\) or \(\delta \notin {a}_{i}{b}_{i}\). The selection points \(\forall \delta \in {a}_{i}{b}_{i}\) are used to confine \(SV\left(.\right)\) (Fig. 4). When \(\frac{{H}^{image}}{N}\) is odd or even, the handwritten signature verification \((VR)\) waits for the sequential feature matching before the system operation is performed. This process is estimated as \([(VR-L)/|{F}_{f}|+1]\), and \(|FP|\) is the maximum false-rate occurrence identified in the second layer. Here, \(\forall 1\le N<{H}^{image}\), the maximum matching feature for varying intensity is expressed as:

Equation (10) estimates the precise digital or handwritten signature verification based on the sequence of textural feature extraction when \(\frac{{H}^{image}}{N}\) is odd, where the identification point and the texture feature do not match. When \(\frac{{H}^{image}}{N}\) is even, the signature verification differs: \(Wr-2({H}^{image}-N)\) is the instance considered for verification, expressed as:

Equation (11) gives the minimum computation required for handwriting identification and verification. In this series, the handwritten signature verification time varies with the above condition through the \({T}_{g}\) and \(VT\) instances. The matching process for signature verification is illustrated in Fig. 5.

The CNN-classified outputs are used to differentiate \({v}_{int}\) from \(K\left({H}^{image}\right)\), such that any \({V}_{int}\oplus {\alpha }_{N}\) achieves \(P\in Idf\). Depending on availability, the even/odd classification is observed, from which \({a}_{N}\oplus {b}_{N}\) is computed. If the above process yields the maximum (i.e., \({a}^{i}{b}^{i}=1\)), the matching is successful (Fig. 5). Figure 6 presents the analysis of \({T}_{g}\), \({T}_{m}\), and \({x}_{i}\) for varying \(\Delta \).
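The \(\oplus\) operations in the matching step suggest a bitwise comparison of binary codes. As one hedged interpretation, not necessarily the authors' exact rule, the sketch below compares two binary texture codes with XOR, reports the fraction of agreeing bits (one minus the normalised Hamming distance), and declares a match only when that fraction reaches a threshold. With the default threshold of 1.0 every bit must agree, which loosely mirrors the success condition \({a}^{i}{b}^{i}=1\). The name `xor_match` and the `min_score` parameter are hypothetical.

```python
import numpy as np

def xor_match(a, b, min_score: float = 1.0) -> tuple[float, bool]:
    """Compare two binary codes bit by bit; return (agreement score, match decision)."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("codes must have the same shape")
    mismatches = np.logical_xor(a, b)       # True wherever the two codes disagree
    score = 1.0 - float(mismatches.mean())  # fraction of agreeing bits
    return score, score >= min_score
```

Lowering `min_score` tolerates a few disagreeing bits, trading fewer false rejections for more false acceptances.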

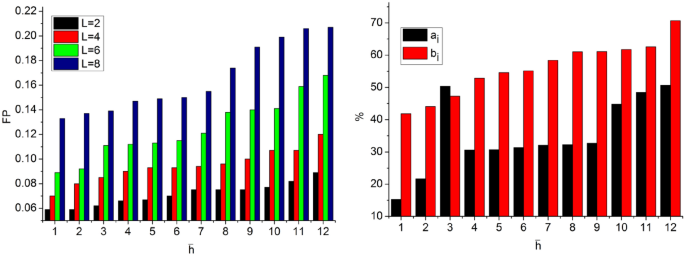

In the proposed scheme, the \({T}_{g}\) and \({T}_{m}\) demands vary with the occurrence of \(\beta \). If \(\beta \) occurs frequently, \(\left(N-H\right)\) becomes invariable and \(\overline{h }(x)\) increases; \(SV(.)\) is therefore suppressed under controlled CNN layers. Specifically, the first layer rejects \(FP\) due to \({a}^{i}{b}^{i}\neq 1\), and hence \({T}_{g}\) is restricted more than \({T}_{m}\). The \({x}_{i}\) increases with \(\beta \), for which \(\delta \) and the matching are precise. Based on the available \(\Delta \) and the \(SV(.)\) classification, \({\alpha }_{i}\) is distributed. The distributions are classified for \((N-H)\) and \({F}_{f}\) such that the output is either \(\delta \in {a}_{i}{b}_{i}\) or \(\delta \notin {a}_{i}{b}_{i}\). If the output is an \(FP\), then \({x}_{i}\) increases and the CNN's layer 1 process is repeated. An analysis of \(FP\), \({a}_{i}\), and \({b}_{i}\) for varying \(\overline{h }\) is presented in Fig. 7.

The \(FP\) varies with \(L\) as \(\delta \in {a}_{i}{b}_{i}>\delta \notin {a}_{i}{b}_{i}\). In this process, \(\beta \) is omitted to satisfy \({a}_{i}{b}_{i}=1 \forall {a}_{N}\oplus {b}_{N}=1\). Therefore, as \(FP\) increases, \(L\) increases to confine them in consecutive iterations. When the iterations from layer 2 back to layer 1 of the CNN are repeated, \({a}_{i}>{b}_{i}\) occurs (randomly); otherwise the variations are small and \({a}_{i}<{b}_{i}\) is the actual output (Fig. 7). The analysis of \(SV(.)\) for varying \({F}_{f}\) and \(\overline{h }\) is presented in Fig. 8.

The proposed scheme identifies \(FP\) \(\forall {\alpha }_{i}\notin \left\{0,1\right\}\) for which \(SV\) occurs. This is due to the occurrence of \(\overline{h }\), so a new \(L\) is required to confine \(FP\). The considered \(VT\) is therefore used to confine \(SV(.)\) for the \({L}_{N-H}\) and \({F}_{f}\) variations, and the considered intervals of \({T}_{i}\) (excluding \({T}_{m}\)) are used to prevent \(FP\) without requiring \(SV\left(.\right)\) balancing (Fig. 8).

Performance analysis

The imagery data are taken from Ref.34 and the Handwritten Hebrew Gender Dataset35. In this dataset, genuine and forged signatures are organized into 42 directories providing 504 testing inputs. The training set is randomly drawn from 128 directories containing 8 to 24 images each. With this input, 16 textural features and an intensity range of 0.1 to 1 are varied to analyze accuracy, precision, feature detection, false positives, and verification time. In the comparative study, the methods SV-SNN21, AVN16, and SVSV28 are compared with the proposed SVV-TF scheme. Sample inputs and outputs representing the key processes discussed above are tabulated in Tables 2 and 3. The signatures used for the analysis are from the authors.

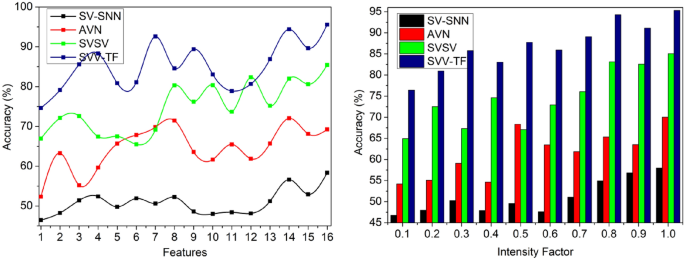

Accuracy

In Fig. 9, the intensity factor refers to the varying pixel intensities, or brightness levels, in a handwritten or digital signature. These changes in pixel intensity are inspected and analyzed during handwriting identification and verification, which helps to recognize various textural aspects, and the intensity factor can affect the accuracy and precision of the handwriting recognition system. The spatial variation in handwritten signatures is identified with \(\sum_{i=1}^{P}{T}_{g}-\left(1-\frac{{T}_{m}}{{T}_{i}}\right)\) from the pixel intensities of the signature texture, and the output is used in point selection.

Precision

The variations in pixel intensity are identified to verify textural feature matching: the system compares the given handwritten signature with the stored digital signature to identify the differences between them. In the first layer, the identification point is detected to recognize the actual writer, and the writer's writing style is used to identify the spatial variations, as shown in Fig. 10. Both the first and second layers are analyzed for accurate handwriting verification, so the scheme maintains high precision in textural feature matching even when the writing surface and writing instrument change.
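Accuracy and precision are not defined explicitly in these sections. For reference, the sketch below uses their standard definitions, treating a genuine signature as the positive class (an assumption about how the evaluation labels signatures): accuracy is the fraction of all decisions that are correct, and precision is the fraction of accepted signatures that are actually genuine, so every false positive (an accepted forgery) lowers it. The function names are hypothetical.

```python
def confusion_counts(y_true: list[int], y_pred: list[int]) -> dict[str, int]:
    """Count TP, FP, TN, FN with 1 = genuine/accepted and 0 = forged/rejected."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have the same length")
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for t, p in zip(y_true, y_pred):
        if p == 1:
            counts["tp" if t == 1 else "fp"] += 1  # accepted: genuine or forgery
        else:
            counts["fn" if t == 1 else "tn"] += 1  # rejected: genuine or forgery
    return counts

def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of correct decisions."""
    c = confusion_counts(y_true, y_pred)
    total = sum(c.values())
    if total == 0:
        raise ValueError("no decisions to evaluate")
    return (c["tp"] + c["tn"]) / total

def precision(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of accepted signatures that are genuine (0.0 if nothing was accepted)."""
    c = confusion_counts(y_true, y_pred)
    accepted = c["tp"] + c["fp"]
    return c["tp"] / accepted if accepted else 0.0
```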

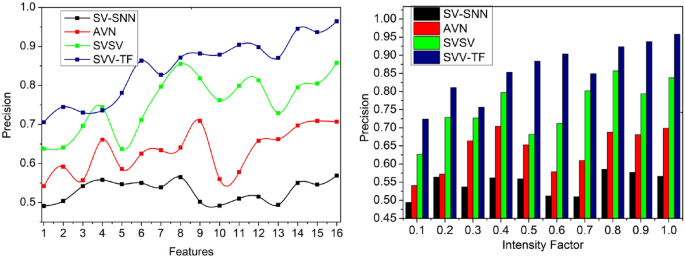

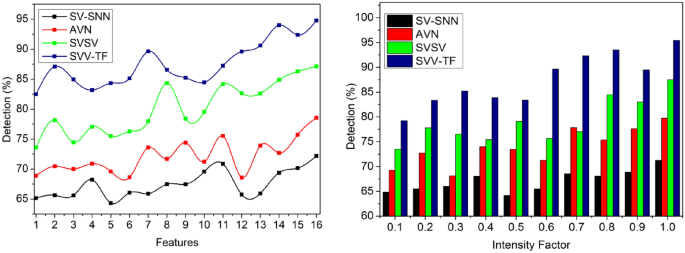

Texture detection

The textural features are analyzed and matched to improve handwritten signature quality for precise identification; the scheme detects spatial variations and different pixel intensities based on the identification points, as illustrated in Fig. 11. The condition \(N<{H}^{image}\) must hold for all digital and handwritten signatures from the input device verified at any time \(VT\), and false positives arise from the spatial variations and pixel intensities identified in the incoming signature.

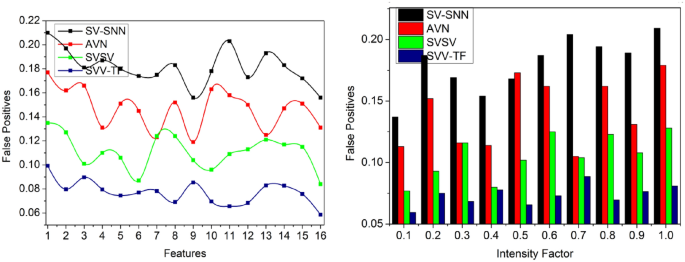

False positives

The proposed scheme detects pixel-intensity variations through textural feature extraction on the input signature, which prevents false factors from accumulating across time intervals. Signatures and identification points are verified for each individual from the texture-feature matching output, and the cases \(N = H^{image}\), \(N > H^{image}\), and \(N < H^{image}\) are evaluated using precise spatial-variation and pixel-intensity identification to meet the time requirements. As a result, the scheme achieves fewer false positives, as shown in Fig. 12.
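One way to read this per-interval extraction is to summarize each region between two successive identification points separately, so that a mismatch in one region does not spill into the next. The sketch below assumes identification points are column indices in a grayscale image and uses mean and standard deviation as placeholder features; `segment_features`, `mismatched_segments`, and the tolerance `tol` are hypothetical.

```python
import numpy as np


def segment_features(image: np.ndarray, points: list[int]) -> list[dict]:
    """Split a grayscale signature at identification-point columns and
    summarize the pixel intensities of each segment between successive points."""
    cols = sorted(set(points))
    feats = []
    for start, end in zip(cols[:-1], cols[1:]):
        seg = image[:, start:end].astype(np.float64)
        feats.append({"start": start, "end": end,
                      "mean": float(seg.mean()), "std": float(seg.std())})
    return feats


def mismatched_segments(query_feats: list[dict], ref_feats: list[dict],
                        tol: float = 10.0) -> list[int]:
    """Indices of segments whose mean intensity differs by more than tol."""
    return [k for k, (q, r) in enumerate(zip(query_feats, ref_feats))
            if abs(q["mean"] - r["mean"]) > tol]


reference = np.array([[0, 0, 100, 100, 200, 200]] * 2, dtype=np.uint8)
query = reference.copy()
query[:, 4:6] = 150  # only the last segment differs
points = [0, 2, 4, 6]
print(mismatched_segments(segment_features(query, points),
                          segment_features(reference, points)))  # [2]
```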

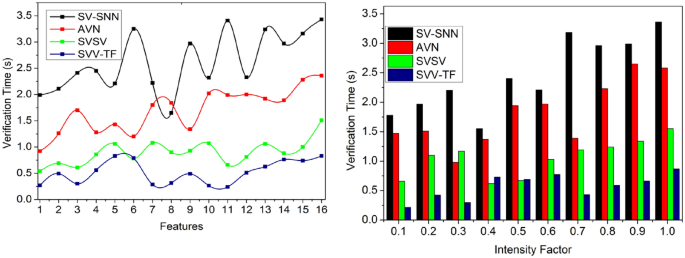

Verification time

The proposed scheme requires less verification time for pixel-intensity analysis and feature matching than the compared methods, as shown in Fig. 13. Pixel intensities are analysed recurrently to match the textural features and identification points used by the process control system. The CNN generates new identification points, from which the maximum matching feature is selected for varying pixel intensity, improving identification-point detection. The verification process is the same for all writers, irrespective of \(N\) and \(VT\).
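The second-layer step of selecting the maximum matching feature can be illustrated without the CNN itself. The sketch assumes each candidate is already a feature vector and uses cosine similarity as the matching score; both are assumptions, `best_match` is a hypothetical helper, and zero-length vectors are not handled.

```python
import numpy as np


def best_match(query: np.ndarray, candidates: np.ndarray) -> tuple[int, float]:
    """Return (index, cosine similarity) of the candidate feature vector
    (one per row) that best matches the query vector."""
    q = query / np.linalg.norm(query)
    c = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    scores = c @ q                  # cosine similarity per candidate
    idx = int(np.argmax(scores))    # maximum matching feature
    return idx, float(scores[idx])


idx, score = best_match(np.array([1.0, 0.0]),
                        np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]))
print(idx)  # 2
```

Because the comparison is a single matrix-vector product, its cost grows linearly with the number of candidates, which is one reason a bounded candidate set helps keep verification time low.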

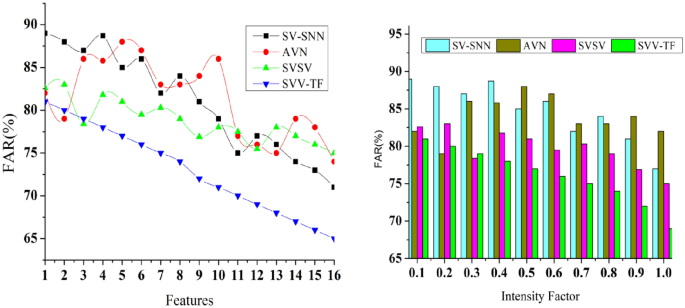

False acceptance rate (FAR)

In biometric and authentication systems, the false acceptance rate (FAR) measures how often the system wrongly accepts an impostor's attempt as a valid user. It is the total number of false acceptances, that is, instances in which the system wrongly accepted an impostor's attempt, divided by the total number of fraudulent attempts.

FAR is calculated using Eq. (12) and shown in Fig. 14; a high FAR implies that the system accepts an unusually large number of unauthorised or fraudulent attempts.
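Without reproducing Eq. (12), the ratio described above can be sketched directly. The sketch assumes that higher match scores indicate a better match and that an attempt is accepted when its score reaches a threshold; the function name and threshold convention are hypothetical.

```python
def false_acceptance_rate(impostor_scores, threshold: float) -> float:
    """FAR = impostor attempts accepted / total impostor attempts.

    An attempt counts as accepted when its score is >= threshold (assumed convention).
    """
    scores = list(impostor_scores)
    if not scores:
        raise ValueError("need at least one impostor attempt")
    accepted = sum(1 for s in scores if s >= threshold)
    return accepted / len(scores)


print(false_acceptance_rate([0.2, 0.6, 0.9, 0.4], threshold=0.5))  # 0.5
```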

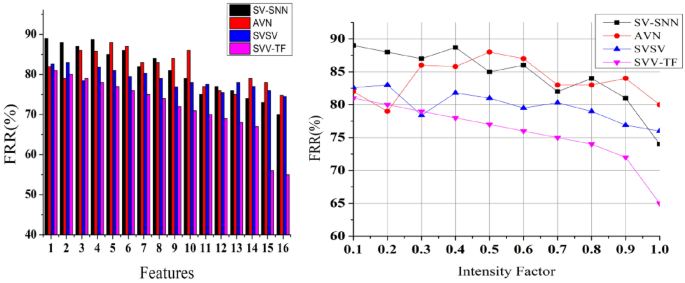

False rejection rate (FRR) analysis

The false rejection rate (FRR) indicates the system's ability to accept valid signatures correctly. A false rejection occurs when the system wrongly rejects a genuine signature, and FRR, calculated using Eq. (13), is the fraction of genuine attempts that are rejected. A low FRR is desirable because valid users are then rarely rejected; a high FRR (Fig. 15) means that the system refuses many legitimate signatures, which frustrates users. FRR should therefore be evaluated regularly to ensure that the signature authentication method provides a good user experience while preserving security.
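A matching sketch for FRR, assuming that higher match scores indicate a better match and that genuine attempts scoring below the threshold are rejected. It follows the definition in the text and is not a transcription of Eq. (13); the function name is hypothetical.

```python
def false_rejection_rate(genuine_scores, threshold: float) -> float:
    """FRR = genuine attempts rejected / total genuine attempts.

    A genuine attempt is rejected when its score is < threshold (assumed convention).
    """
    scores = list(genuine_scores)
    if not scores:
        raise ValueError("need at least one genuine attempt")
    rejected = sum(1 for s in scores if s < threshold)
    return rejected / len(scores)


print(false_rejection_rate([0.95, 0.8, 0.45, 0.7], threshold=0.5))  # 0.25
```

Raising the threshold lowers FAR but raises FRR, which is the trade-off that the equal error rate summarizes.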

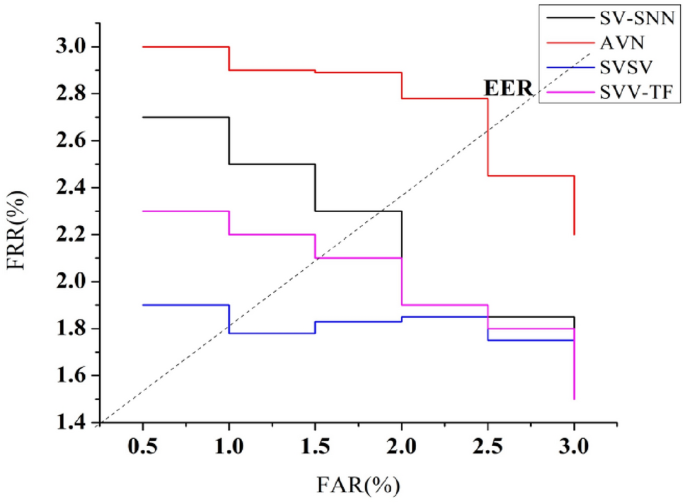

Equal error rate (EER)

The equal error rate (EER) depicted in Fig. 16 is the point on the receiver operating characteristic (ROC) curve at which FAR and FRR are equal. The EER is a significant indicator because it marks the operating point where the system balances the risk of wrongly accepting an impostor against the risk of wrongly rejecting a legitimate user. A lower EER indicates a more accurate system with fewer errors.
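The EER can be approximated by sweeping a decision threshold over the observed scores and taking the point where FAR and FRR are closest. This sketch assumes higher scores indicate a better match and reports the mean of FAR and FRR at the closest threshold, one common convention; interpolating along the ROC curve is an alternative. The function name is hypothetical.

```python
import numpy as np


def equal_error_rate(genuine, impostor) -> tuple[float, float]:
    """Approximate (EER, threshold) by sweeping all observed scores as thresholds."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    far = np.array([(impostor >= t).mean() for t in thresholds])  # impostors accepted
    frr = np.array([(genuine < t).mean() for t in thresholds])    # genuine rejected
    k = int(np.argmin(np.abs(far - frr)))                         # closest crossing
    return float((far[k] + frr[k]) / 2), float(thresholds[k])


eer, thr = equal_error_rate([0.6, 0.7, 0.8, 0.9], [0.1, 0.2, 0.3, 0.65])
print(eer, thr)  # 0.25 0.65
```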

The comparative analysis above is summarized in Tables 4 and 5 for the features and intensity factors, respectively.

Improvements

Accuracy, precision, and detection improve by 12.29%, 12.65%, and 15.5%, respectively, while false positives and verification time are reduced by 13.01% and 10.99%. Accuracy measures the overall correctness of each model's predictions; SVV-TF achieves the highest accuracy, 95.587%, meaning it has the highest rate of correct predictions. Precision is the ratio of true positives to the total of true and false positives; SVV-TF also leads here with a precision of 0.9644, indicating that the smallest share of its positive predictions are false. The detection rate evaluates each model's ability to identify instances of interest correctly; SVV-TF leads with a detection rate of 94.785%. False positives are instances incorrectly predicted as positive; SVV-TF has the lowest false-positive rate, 0.0586, and therefore makes the fewest such mistakes. Verification time measures how long each model takes to perform its task; SVV-TF is the fastest, taking only 0.831 s.

Improvements

Accuracy, precision, and detection improve by 12.14%, 12.85%, and 15.92%, respectively, while false positives and verification time are reduced by 9.1% and 10.85%. SVV-TF makes accurate predictions more consistently than the other models. Precision, the ratio of true positives to the total of true and false positives, is highest for SVV-TF at 0.9579, reflecting its ability to minimize false positives. The detection rate assesses each model's ability to identify instances of interest; SVV-TF achieves 95.443%, indicating a superior capacity to detect relevant instances correctly. False positives, instances incorrectly predicted as positive, are kept low by SVV-TF at 0.081, further underscoring its precision. Verification time measures the time each model needs to perform its task; here too SVV-TF is the fastest, requiring only 0.869 s.

Conclusion

Writer identification determines who wrote a document by analysing its handwriting, text, and images. It has shown promise in many areas, such as digital forensics, criminal investigations, and determining the authorship of documents. Identifying the writer is difficult when the image is complex, especially when different handwriting styles are present. The data were collected from the Kaggle signature verification dataset34 and the handwritten gender dataset35. Offline (biometric) signature verification works with scanned signatures, whereas online signature verification works with recordings of the writing process. Compared with classical biometrics, handwritten signature identification is less accurate and raises security issues.

The proposed approach, which combines artificial intelligence with textural features, attains high accuracy in handwriting identification. If the pixel intensities do not match the available textural features, the second layer is repeated from the new spatial variation for new pattern recognition. This improves accuracy across varying feature inputs and training images. Accuracy, precision, and detection improve by 12.29%, 12.65%, and 15.5%, respectively, while false positives and verification time are reduced by 13.01% and 10.99%. Several promising avenues are suggested for future research: extending the model to handle multiple languages, exploring advanced feature extraction methods, and incorporating temporal aspects of handwriting.

Further directions include investigating online handwriting recognition and developing techniques for detecting forged or fraudulent handwriting. Transfer learning and domain adaptation could be explored to adapt the model to different handwriting styles. Efficiency and scalability for real-world deployment, together with security and privacy concerns, also deserve attention. Finally, establishing standardized benchmarks and evaluation metrics would enable fair comparisons between handwriting identification and verification approaches. These directions could significantly advance applications related to authentication, security, and accessibility.

Data availability

All data generated or analysed during this study are included in this published article.

References

Faundez-Zanuy, M., Brotons-Rufes, O., Paul-Recarens, C. & Plamondon, R. On handwriting pressure normalization for interoperability of different acquisition stylus. IEEE Access 9, 18443–18453 (2021).

Moinuddin, S. M. K., Kumar, S., Jain, A. K. & Ahmed, S. Analysis and simulation of handwritten recognition system. Mater. Today Proc. 47, 6082–6088 (2021).

Najla, A. Q., Khayyat, M. & Suen, C. Y. Novel features to detect gender from handwritten documents. Pattern Recogn. Lett. 171, 201 (2022).

Aouraghe, I., Khaissidi, G. & Mrabti, M. A literature review of online handwriting analysis to detect Parkinson's disease at an early stage. Multimedia Tools Appl. 1, 1–26 (2022).

Gross, E. R., Gusakova, S. M., Ogoreltseva, N. V. & Okhlupina, A. N. The JSM-system of psychological and handwriting research on signatures. Autom. Document. Math. Linguist. 54(5), 260–268 (2020).

Melhaoui, O. E. & Benchaou, S. An efficient signature recognition system based on gradient features and neural network classifier. Procedia Comput. Sci. 198, 385–390 (2022).

Heckeroth, J. et al. Features of digitally captured signatures vs pen and paper signatures: Similar or completely different? Forens. Sci. Int. 318, 110587 (2021).

Tsourounis, D., Theodorakopoulos, I., Zois, E. N. & Economou, G. From text to signatures: Knowledge transfer for efficient deep feature learning in offline signature verification. Expert Syst. Appl. 189, 116136 (2022).

Semma, A., Hannad, Y., Siddiqi, I., Lazrak, S. & Kettani, M. E. Y. E. Feature learning and encoding for multi-script writer identification. Int. J. Document Anal. Recogn. 25(2), 79–93 (2022).

Rahman, A. U. & Halim, Z. Identifying dominant emotional state using handwriting and drawing samples by fusing features. Appl. Intell. 1, 1–17 (2022).

Xie, L., Wu, Z., Zhang, X., Li, Y. & Wang, X. Writer-independent online signature verification based on 2D representation of time series data using triplet supervised network. Measurement 197, 111312 (2022).

Naz, S., Bibi, K. & Ahmad, R. DeepSignature: Fine-tuned transfer learning based signature verification system. Multimedia Tools Appl. 1, 1–10 (2022).

Houtinezhad, M. & Ghaffari, H. R. Offline signature verification system using features linear mapping in the candidate points. Multimedia Tools Appl. 1, 1–33 (2022).

Keykhosravi, D., Razavi, S. N., Majidzadeh, K. & Sangar, A. B. Offline writer identification using a developed deep neural network based on a novel signature dataset. J. Amb. Intell. Hum. Comput. 1, 1–17 (2022).

Batool, F. E. et al. Offline signature verification system: A novel technique of fusion of GLCM and geometric features using SVM. Multimedia Tools Appl. 1, 1–20 (2020).

Li, H., Wei, P. & Hu, P. AVN: An adversarial variation network model for handwritten signature verification. IEEE Trans. Multimedia 24, 594–608 (2021).

Ma, C. et al. A feature fusion sequence learning approach for quantitative analysis of tremor symptoms based on digital handwriting. Expert Syst. Appl. 203, 117400 (2022).

Zhao, B. et al. Deep imitator: Handwriting calligraphy imitation via deep attention networks. Pattern Recogn. 104, 107080 (2020).

Yang, B., Zhang, Y., Liu, Z., Jiang, X. & Xu, M. Handwriting posture prediction based on unsupervised model. Pattern Recogn. 100, 107093 (2020).

Al-Haija, Q. A. Leveraging ShuffleNet transfer learning to enhance handwritten character recognition. Gene Expr. Patterns 45, 119263 (2022).

Ruiz, V., Linares, I., Sanchez, A. & Velez, J. F. Offline handwritten signature verification using compositional synthetic generation of signatures and Siamese Neural Networks. Neurocomputing 374, 30–41 (2020).

Xue, G., Liu, S., Gong, D. & Ma, Y. ATP-DenseNet: A hybrid deep learning-based gender identification of handwriting. Neural Comput. Appl. 33(10), 4611–4622 (2021).

Hamdi, Y., Boubaker, H. & Alimi, A. M. Data augmentation using geometric, frequency, and beta modeling approaches for improving multi-lingual online handwriting recognition. Int. J. Document Anal. Recogn. 24(3), 283–298 (2021).

Bouibed, M. L., Nemmour, H. & Chibani, Y. SVM-based writer retrieval system in handwritten document images. Multimedia Tools Appl. 81(16), 22629–22651 (2022).

Alpar, O. Signature barcodes for online verification. Pattern Recogn. 124, 108426 (2022).

Maruyama, T. M., Oliveira, L. S., Britto, A. S. & Sabourin, R. Intrapersonal parameter optimization for offline handwritten signature augmentation. IEEE Trans. Inf. Forens. Secur. 16, 1335–1350 (2020).

Zenati, A., Ouarda, W. & Alimi, A. M. SSDIS-BEM: A new signature steganography document image system based on beta elliptic modeling. Eng. Sci. Technol. 23(3), 470–482 (2020).

Wei, Z., Yang, S., Xie, Y., Li, F. & Zhao, B. SVSV: Online handwritten signature verification based on sound and vibration. Inf. Sci. 572, 109–125 (2021).

Cadola, L. et al. The potential of collaborative learning as a tool for forensic students: Application to signature examination. Sci. Justice 60(3), 273–283 (2020).

Houtinezhad, M. & Ghaffary, H. R. Writer-independent signature verification based on feature extraction fusion. Multimedia Tools Appl. 79(9), 6759–6779 (2020).

Zhou, W., Liu, M. & Xu, Z. The dual-fuzzy convolutional neural network to deal with handwritten image recognition. IEEE Trans. Fuzzy Syst. 30(12), 5225–5236 (2022).

Ponce-Hernandez, W., Blanco-Gonzalo, R., Liu-Jimenez, J. & Sanchez-Reillo, R. Fuzzy vault scheme based on fixed-length templates applied to dynamic signature verification. IEEE Access 8, 11152–11164 (2020).

Abdul-Haleem, M. G. Offline handwritten signature verification based on local ridges features and haar wavelet transform. Iraqi J. Sci. 1, 855–865 (2022).

Signature verification dataset. Kaggle. https://www.kaggle.com/datasets/robinreni/signature-verification-dataset.

Funding

This work is funded by the higher education teaching reform research and practice project of Henan Province (2019SJGLX637, 2021SJGLX606); Teaching reform research project of Henan Open University (2021JGXMZ002).

Author information

Contributions

H.Z. wrote the draft of the manuscript. H.Z. and H.L. contributed to data curation and analysis. H.Z. contributed to manuscript revision. All authors approved the submitted version.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, H., Li, H. Handwriting identification and verification using artificial intelligence-assisted textural features. Sci Rep 13, 21739 (2023). https://doi.org/10.1038/s41598-023-48789-9

DOI: https://doi.org/10.1038/s41598-023-48789-9
